By Nick Statt (@nickstatt)

(first published Nov 21, 2017)

As of late November 2017 (five months ago), Facebook was still letting advertisers racially discriminate in housing ads. If the company still lets people exclude minority groups in housing ads, it is violating federal law.

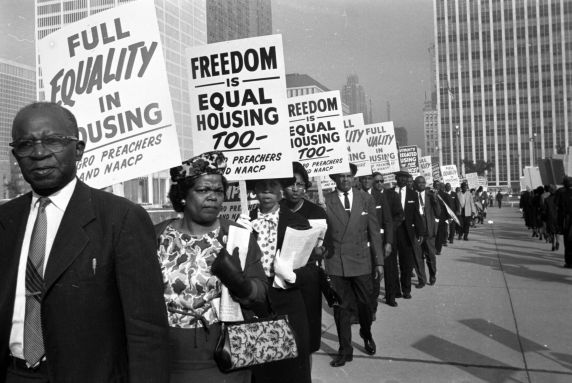

One year after a ProPublica investigation found that Facebook lets housing advertisers exclude users by race, a separate follow-up investigation found that the social network has barely changed any of its practices. The new story, published today, details how ProPublica was able to purchase targeted housing ads that excluded groups like African-Americans, Jews, and Spanish speakers, among others. Such ad targeting for housing violates the federal Fair Housing Act, which was passed to address long-standing discriminatory practices in the real estate and rental industries that disadvantage black, Asian, and Latino renters.

Facebook, which earlier this month launched a revamped housing category on its new Craigslist competitor, owned up to its mistake in a statement from Ami Vora, a vice president of product management at Facebook, given to The Verge:

This was a failure in our enforcement and we’re disappointed that we fell short of our commitments. Earlier this year, we added additional safeguards to protect against the abuse of our multicultural affinity tools to facilitate discrimination in housing, credit and employment. The rental housing ads purchased by ProPublica should have but did not trigger the extra review and certifications we put in place due to a technical failure. Our safeguards, including additional human reviewers and machine learning systems have successfully flagged millions of ads and their effectiveness has improved over time.

Tens of thousands of advertisers have confirmed compliance with our tighter restrictions, including that they follow all applicable laws. We don’t want Facebook to be used for discrimination and will continue to strengthen our policies, hire more ad reviewers, and refine machine learning tools to help detect violations. Our systems continue to improve but we can do better. While we currently require compliance notifications of advertisers that seek to place ads for housing, employment, and credit opportunities, we will extend this requirement to all advertisers who choose to exclude some users from seeing their ads on Facebook to also confirm their compliance with our anti-discrimination policies — and the law.

The investigation is just the latest in a series of reports detailing how Facebook’s algorithm-driven ad network is susceptible to bad actors, including foreign governments and propaganda-peddling fake news machines.

But the investigation found that the company, despite claiming it would update its policies and increase enforcement of its rules, did very little to alter how housing ads are placed on Facebook. The company said all the way back in November of last year, after ProPublica’s initial report, that it would no longer allow ads for housing, credit, or employment that target based on “ethnic affinity,” the loose term Facebook uses to identify race-related profiles built using user-reported information.

FACEBOOK APPEARS TO HAVE ONLY MADE COSMETIC CHANGES TO ITS AD-TARGETING SYSTEM

In February of this year, Facebook updated its ad policies further to “make it clear that advertisers may not discriminate against people based on personal attributes such as race, ethnicity, color, national origin, religion, age, sex, sexual orientation, gender identity, family status, disability, medical or genetic condition.” Yet ProPublica found that you are still able to do just that, despite Facebook’s new algorithmic review system that is supposedly designed to flag these ads. Nearly all of the test ads, which were immediately removed following the investigation, were approved within minutes.

In fact, the only meaningful change Facebook appears to have made is a cosmetic one: the company renamed “ethnic affinity” to “multicultural affinity” and relocated the category under “Behaviors.” (Previously it was listed under “Demographics.”) In other words, Facebook simply reframed race as a behavioral trait its users exhibit, which does not seem meaningfully different from what the category used to describe.

The company has historically gone to great lengths to avoid saying advertisers can target based on race, using euphemisms like ethnic affinity. Still, advertisers are widely able to read between the lines, like when Universal Pictures marketed different Straight Outta Compton trailers last year for the “white” and the “African-American” ethnic affinities.

While it’s not illegal to perform this type of targeting for films (companies do so all the time on television), housing is a different story. The Fair Housing Act prohibits discriminatory housing ads alongside those for employment and credit. However, the US Department of Housing and Urban Development, now headed by Trump-appointed Ben Carson, has turned a blind eye toward Facebook, closing its inquiry into the company’s practices. That means it’s largely up to investigative journalists, watchdogs, and advocacy groups to hold the company accountable.

In one particularly egregious example, ProPublica was even able to go so far as to draft housing ads based on New York City ZIP codes, cross-checking the neighborhoods with US Census data to ensure the ad would exclude predominantly black and Hispanic neighborhoods. The discriminatory practice is known as redlining and it stretches back decades in America, depriving immigrant and minority communities of vital services like access to banking, health care, and food. Facebook approved the ads immediately, ProPublica says.